A Practical White Paper for Technical Architects from Apptad

Informatica’s cloud-native MDM SaaS platform now offers a low-latency Business Events Framework that can stream create, update, delete, and merge events seconds after they occur. For enterprises building event-driven architectures on Microsoft Azure, a natural target for these events is Azure Event Hubs: a fully managed, Kafka-compatible ingestion service that can handle millions of messages per second. This white paper distills the design patterns, configuration steps, and operational guardrails required to connect MDM SaaS to Event Hubs in real time, enabling Apptad architects to deliver responsive, scalable data pipelines for customers.

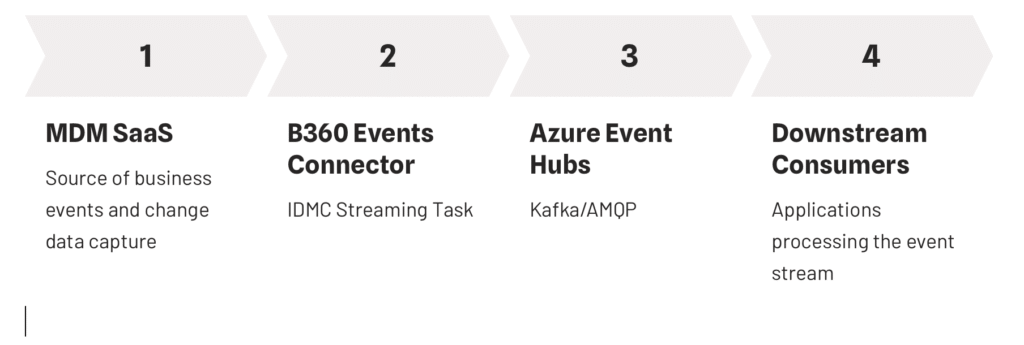

Architecture Overview

Event Source: Informatica MDM SaaS

MDM’s Business Events Framework publishes system- or user-triggered change-data-capture (CDC) events into an internal collection. A Real-Time Publishing option streams those events immediately—no batch job or polling required—via Informatica Intelligent Data Management Cloud (IDMC).

Transport: IDMC Streaming Connectors

- B360 Events Connector (Cloud Data Integration or Mass Ingestion Streaming) reads the internal event collection and serializes each record as Avro or JSON.

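- Azure Event Hubs Connector transmits the serialized payload to an Event Hubs namespace using AMQP or the Kafka protocol, with optional checkpointing to Azure Blob Storage so that processing can resume from the last recorded position after a restart. Delivery remains at-least-once, so downstream consumers should tolerate duplicates.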

Event Sink: Azure Event Hubs

Event Hubs partitions the stream for parallel processing and supports polyglot consumers, including Azure Functions, Stream Analytics, and Databricks. Existing Kafka clients can connect through its Kafka-compatible endpoint with configuration changes only, not code changes.
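As a rough illustration of the Kafka-compatible endpoint, the sketch below builds the client properties a Kafka producer or consumer would typically need to reach an Event Hubs namespace. The function name `kafka_config_for_event_hubs` and the sample values are hypothetical; the property names follow librdkafka-style conventions and should be verified against the client library actually in use.

```python
def kafka_config_for_event_hubs(namespace_fqdn: str, connection_string: str) -> dict:
    """Return librdkafka-style settings for the Event Hubs Kafka endpoint.

    Assumption: the namespace is reached on port 9093 over SASL_SSL with the
    PLAIN mechanism, using the literal username "$ConnectionString" and the
    namespace connection string as the password.
    """
    if not namespace_fqdn or "/" in namespace_fqdn:
        raise ValueError("expected a bare host name, e.g. <name>.servicebus.windows.net")
    return {
        "bootstrap.servers": f"{namespace_fqdn}:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


# Placeholder values for illustration only.
config = kafka_config_for_event_hubs(
    "contoso-mdm.servicebus.windows.net",
    "Endpoint=sb://contoso-mdm.servicebus.windows.net/;SharedAccessKeyName=listen-only;SharedAccessKey=<key>",
)
```

Each event hub then appears to the Kafka client as a topic of the same name, so an existing consumer usually needs only these settings and a topic name.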

Step-by-Step Implementation

1. Enable Business Events in MDM SaaS

- Open Business Events in Customer 360.

- For each Business Entity (e.g., Party, Address), select the actions that must raise events (CREATE, UPDATE, DELETE, MERGE).

- Choose Real-Time as the delivery mode to populate the event collection instantly.

2. Create Connections in IDMC Administrator

| Connection | Key Properties | Notes |
|---|---|---|
| B360 Events | Org URL, OAuth credentials, event version (v2) | Captures the internal CDC stream. |
| Azure Event Hubs | Namespace FQDN, Shared Access Key, Event Hub name, Blob checkpoint container | Supports Kafka or AMQP; configure Send permission. |
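Before entering a Shared Access Key in IDMC, it can help to confirm outside the tool that the policy really carries Send permission, for example by calling the Event Hubs REST endpoint with a token derived from the key. The sketch below derives such a Shared Access Signature token using only the standard library. The policy name, key, and URI are placeholders, and the helper name `generate_sas_token` is hypothetical.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, key_name: str, key: str,
                       ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Derive a SAS token from a shared access policy name and key."""
    # Expiry is an absolute Unix timestamp; `now` is injectable for testing.
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The signature is an HMAC-SHA256 over "<url-encoded-uri>\n<expiry>".
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}"
            f"&sig={signature}&se={expiry}&skn={key_name}")


# Placeholder namespace, hub, policy, and key.
token = generate_sas_token(
    "https://contoso-mdm.servicebus.windows.net/customer-events",
    key_name="send-only",
    key="not-a-real-key",
)
```

Scoping the URI to a single event hub rather than the whole namespace keeps the token as narrow as the Send-only policy it is derived from.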

3. Build the Streaming Task

- Source: B360 Events connection.

- Mapping: Optionally enrich or filter the payload; retain the primary keys and action codes for downstream consumers.

- Target: Azure Event Hubs connection.

- Throughput Tuning:

  - Batch size: 500–1,000 events.

  - Concurrency: 4–8 threads (aligned with Event Hub partitions).

- Deployment: Assign to a Secure Agent group with low-latency network to Azure.

- Monitoring: Use IDMC’s job console or REST API; enable auto-restart on failure.
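The batch-size setting above trades latency for throughput: larger batches mean fewer round trips but a longer wait before the first event in a batch is sent. The sketch below illustrates that trade-off with a small size-or-time batcher. It is not how the IDMC connector is implemented; `MicroBatcher` and its parameters are hypothetical names used only to make the behavior concrete.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional


@dataclass
class MicroBatcher:
    """Collect events and release them when a size or age limit is reached."""
    max_size: int = 500        # flush once this many events are buffered
    max_wait_s: float = 1.0    # or once the oldest buffered event is this old
    _buffer: List[Any] = field(default_factory=list, init=False)
    _first_ts: Optional[float] = field(default=None, init=False)

    def add(self, event: Any, now: float) -> Optional[List[Any]]:
        """Buffer one event; return a batch if a limit was hit, else None.

        The age limit is only checked when an event arrives, so a real
        sender would also need a timer to flush an idle buffer.
        """
        if not self._buffer:
            self._first_ts = now
        self._buffer.append(event)
        too_big = len(self._buffer) >= self.max_size
        too_old = now - self._first_ts >= self.max_wait_s
        return self.flush() if (too_big or too_old) else None

    def flush(self) -> List[Any]:
        """Return everything buffered so far and reset the batcher."""
        batch, self._buffer, self._first_ts = self._buffer, [], None
        return batch


batcher = MicroBatcher(max_size=3, max_wait_s=1.0)
first = batcher.add({"id": 1}, now=0.0)   # None: still buffering
```

Lowering `max_wait_s` caps the added latency at the cost of smaller, more frequent sends.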

4. Configure Azure Event Hubs

| Parameter | Recommendation | Rationale |
|---|---|---|
| Tier | Standard for ≤20 MB/s; Dedicated or Premium for higher volumes or dynamic partition scaling. | Guarantees throughput. |
| Partitions | Start with 4–8; match IDMC concurrency. | Preserves order per partition key. |
| Capture | Enable if a replayable data lake is required (Avro files on ADLS). | Simplifies replay. |
| Consumer Groups | One per downstream application (e.g., “realtime-API”, “analytics-sink”). | Isolates offsets. |
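The sizing arithmetic behind the tier and partition recommendations can be sketched as follows, assuming the Standard-tier ingress allowance of 1 MB/s or 1,000 events/s per throughput unit, whichever limit is reached first. The second helper shows why a stable partition key preserves per-entity ordering; Event Hubs applies its own internal hash, so `illustrative_partition` is a hypothetical stand-in and will not reproduce the service's actual partition assignment.

```python
import hashlib
import math


def throughput_units_needed(ingress_mb_per_s: float, ingress_events_per_s: float) -> int:
    """Estimate Standard-tier throughput units from peak ingress load."""
    by_bandwidth = math.ceil(ingress_mb_per_s / 1.0)       # 1 MB/s per unit
    by_event_rate = math.ceil(ingress_events_per_s / 1000)  # 1,000 events/s per unit
    return max(1, by_bandwidth, by_event_rate)


def illustrative_partition(business_key: str, partition_count: int) -> int:
    """Map a business key to a partition index with a stable hash.

    Illustration only: the same key always lands on the same partition,
    which is what keeps events for one entity in order.
    """
    digest = hashlib.sha256(business_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


units = throughput_units_needed(ingress_mb_per_s=2.5, ingress_events_per_s=1200)
slot = illustrative_partition("PARTY-000123", partition_count=8)
```

In this example the bandwidth limit dominates (3 units), while a workload of many small events would instead be bounded by the event-rate limit.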

5. Downstream Consumption Patterns

- Microservices: Use a Kafka client or Azure Functions with an Event Hubs trigger to update operational stores in near real time.

- Analytics: Stream Analytics or Databricks Structured Streaming can materialize the event flow into Synapse or Delta Lake for reporting.

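- Audit & Replay: Within the Event Hubs retention window, any consumer can replay from a given timestamp using EventPosition.FromEnqueuedTime(). With Capture enabled, older events remain available for reprocessing from the Avro files on ADLS.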
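To make the microservice pattern concrete, the sketch below applies the four MDM actions to an in-memory operational store. The event shape (`action`, `businessId`, `payload`, `mergedIds`) is an assumption made for illustration and is not the documented Business Events schema; real field names should be taken from the payload the connector actually emits.

```python
from typing import Any, Dict


def apply_event(store: Dict[str, dict], event: Dict[str, Any]) -> None:
    """Apply one MDM change event to a key-value operational store."""
    action = event["action"]
    key = event["businessId"]          # hypothetical field name
    if action in ("CREATE", "UPDATE"):
        # Upsert keeps the handler safe if a CREATE is seen twice.
        store[key] = event["payload"]
    elif action == "DELETE":
        store.pop(key, None)           # tolerate deletes for unknown keys
    elif action == "MERGE":
        # Remove the records that were merged away, keep the survivor.
        for merged_id in event.get("mergedIds", []):
            store.pop(merged_id, None)
        store[key] = event["payload"]
    else:
        raise ValueError(f"unsupported action: {action}")


store: Dict[str, dict] = {}
apply_event(store, {"action": "CREATE", "businessId": "P1", "payload": {"name": "Ann"}})
apply_event(store, {"action": "CREATE", "businessId": "P2", "payload": {"name": "Ann B."}})
apply_event(store, {"action": "MERGE", "businessId": "P1", "mergedIds": ["P2"],
                    "payload": {"name": "Ann B."}})
```

Because every branch is an upsert or a tolerant delete, replaying the same events from an earlier timestamp leaves the store in the same final state.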

Security and Governance Considerations

- Network Isolation: Restrict the Event Hubs namespace to a dedicated Azure VNet through private endpoints (Private Link), or limit access from IDMC with IP allow-lists.

- Authentication: Use Shared Access Policies with least-privilege Send rights for the connector and Listen rights for consumers.

- Schema Evolution: Store Avro schemas in Azure Schema Registry and embed schema IDs in message headers to ensure forward compatibility.

- Data Privacy: Mask or hash sensitive attributes before publishing, or restrict them via MDM’s field-level security.

- Lineage: Capture event metadata (source GUID, version, timestamp) to maintain traceability for audits.
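The data-privacy guideline above can be sketched as a small masking step applied in the mapping or in a pre-publish hook. The example uses a keyed hash (HMAC-SHA256) so that the same input always produces the same token, which lets consumers join on the masked value without seeing the original. The field names and the `mask_sensitive` helper are hypothetical, and the secret would normally come from a key vault rather than source code.

```python
import hashlib
import hmac
from typing import Any, Dict, Iterable


def mask_sensitive(record: Dict[str, Any], sensitive_fields: Iterable[str],
                   secret: bytes) -> Dict[str, Any]:
    """Return a copy of the record with sensitive fields replaced by keyed hashes."""
    masked = dict(record)  # shallow copy so the caller's record is untouched
    for name in sensitive_fields:
        value = record.get(name)
        if value is None:
            continue       # nothing to mask for absent or null fields
        digest = hmac.new(secret, str(value).encode("utf-8"), hashlib.sha256)
        masked[name] = digest.hexdigest()
    return masked


original = {"businessId": "P1", "email": "ann@example.com", "country": "DE"}
published = mask_sensitive(original, ["email", "taxId"], secret=b"demo-secret")
```

A keyed hash is preferred here over a plain hash because low-entropy values such as email addresses are otherwise easy to recover by guessing.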

Operational Best Practices

| Topic | Guideline |
|---|---|
| Throughput Testing | Run synthetic load to verify partition balance; partitions can be added after creation only in the Premium or Dedicated tier. |
| Duplicate Handling | Event Hubs delivers at-least-once; design idempotent consumers or use record versioning. |
| High Availability | Enable geo-disaster recovery at namespace level for cross-region failover; note that Geo-DR pairing replicates namespace metadata, not event data. |
| Cost Control | Tune IDMC task batch size vs. latency; Event Hubs charges per throughput unit and captured storage. |
| Alerting | Wire Azure Monitor metrics (IncomingMessages, ThrottledRequests) to Apptad’s observability stack. |
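The duplicate-handling guideline can be illustrated with a consumer that tracks the highest version applied per record and ignores anything at or below it. This is a minimal sketch that assumes each event carries a monotonically increasing `version` per `businessId`; both field names and the `IdempotentApplier` class are hypothetical, and a production consumer would persist the version map alongside the target store.

```python
from typing import Any, Dict, List


class IdempotentApplier:
    """Apply each (businessId, version) at most once, dropping redeliveries."""

    def __init__(self) -> None:
        self._last_version: Dict[str, int] = {}
        self.applied: List[Dict[str, Any]] = []

    def handle(self, event: Dict[str, Any]) -> bool:
        """Return True if the event was applied, False if it was a duplicate or stale."""
        key, version = event["businessId"], event["version"]
        if version <= self._last_version.get(key, -1):
            return False               # already seen this version or a newer one
        self._last_version[key] = version
        self.applied.append(event)     # stand-in for the real side effect
        return True


applier = IdempotentApplier()
results = [
    applier.handle({"businessId": "P1", "version": 1}),
    applier.handle({"businessId": "P1", "version": 1}),  # redelivery
    applier.handle({"businessId": "P1", "version": 2}),
]
```

With at-least-once delivery the second call is expected in practice, and the version check turns it into a no-op instead of a double write.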

Value Proposition for Apptad

Apptad’s status as an Informatica Platinum Partner and Microsoft Solution Integrator positions the team to deliver turnkey, real-time MDM streaming solutions. Combining MDM SaaS events with Azure Event Hubs enables:

- Near-Real-Time Data Propagation to downstream apps, typically within seconds of the change, which is critical for omnichannel customer experiences.

- Decoupled Microservices where producers and consumers evolve independently, reducing integration debt.

- Unified Analytics Fabric by funneling golden-record changes directly into Synapse, Databricks, or Power BI for continuous insights.

- Regulatory Confidence through immutable event logs and full lineage, supporting trace-back for audits in life-sciences or finance use cases.

Conclusion

Real-time publishing from Informatica MDM SaaS to Azure Event Hubs creates an efficient, cloud-native backbone for event-driven data architectures. By following the connection patterns, security controls, and scaling techniques outlined above, Apptad architects can deliver responsive, auditable, and future-proof master-data pipelines that unlock immediate business value while laying the groundwork for advanced AI and analytics workloads.